6 Creating and Printing 3D Models with LiDAR Data

Summary

In this project, we integrated Light Detection and Ranging (LiDAR) sensors, virtual reality (VR) visualization, and 3D printing into the IA 340 Data Mining and IA 342 Data Visualization courses. Students learned how to create 3D models with 3D scanners, visualize the models in VR, and print them with 3D printers.

Rationale

LiDAR data is widely used in 3D modeling [1] and visualization [2]. Collected LiDAR data contains the x, y, and z coordinates of points on the target objects and can be converted into 3D models that can be printed with 3D printers.
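
As a rough illustration of the first step in that conversion, the sketch below stores a list of x, y, z points as an ASCII PLY file, a common point-cloud format that many mesh-editing tools can open. The function name `points_to_ply` and the sample coordinates are hypothetical, and the surface reconstruction needed to turn a point cloud into a printable solid is not shown.

```python
from typing import Iterable, Tuple

Point = Tuple[float, float, float]


def points_to_ply(points: Iterable[Point]) -> str:
    """Return the text of an ASCII PLY file holding the given points."""
    pts = list(points)
    header = [
        "ply",
        "format ascii 1.0",
        f"element vertex {len(pts)}",  # number of points that follow
        "property float x",
        "property float y",
        "property float z",
        "end_header",
    ]
    # One line per point: x y z, separated by spaces.
    body = [f"{x:.4f} {y:.4f} {z:.4f}" for x, y, z in pts]
    return "\n".join(header + body) + "\n"


# Hypothetical sample: three points, coordinates assumed to be in meters.
sample = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.5), (0.0, 1.0, 1.25)]
ply_text = points_to_ply(sample)
```

Writing `ply_text` to a file with a `.ply` extension should give a point cloud that can then be meshed and exported for printing in a separate tool.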

This project teaches students in Geography (GS), Intelligence Analysis (IA), Computer Science (CS), and any other interested students the basic concepts and practice of using LiDAR data to create 3D models. The project also introduces methods and techniques for modifying 3D models and helps students print their models in the JMU 3Space Classroom.

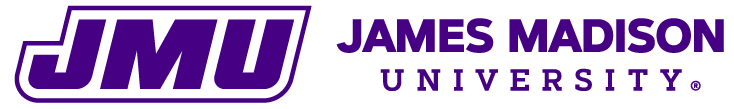

The School of Integrated Science has purchased ten 2D Sweep LiDAR Sensors [3]. However, creating 3D models with the existing Sweep Sensors requires a Sweep 3D Scanning Kit [4]. The project funded the purchase of 4 Sweep 3D Scanning Kits.

Implementation

With the help of JMU Innovation Services and staff in the School of Integrated Science, we assembled 8 Sweep 3D Scanning Kits.

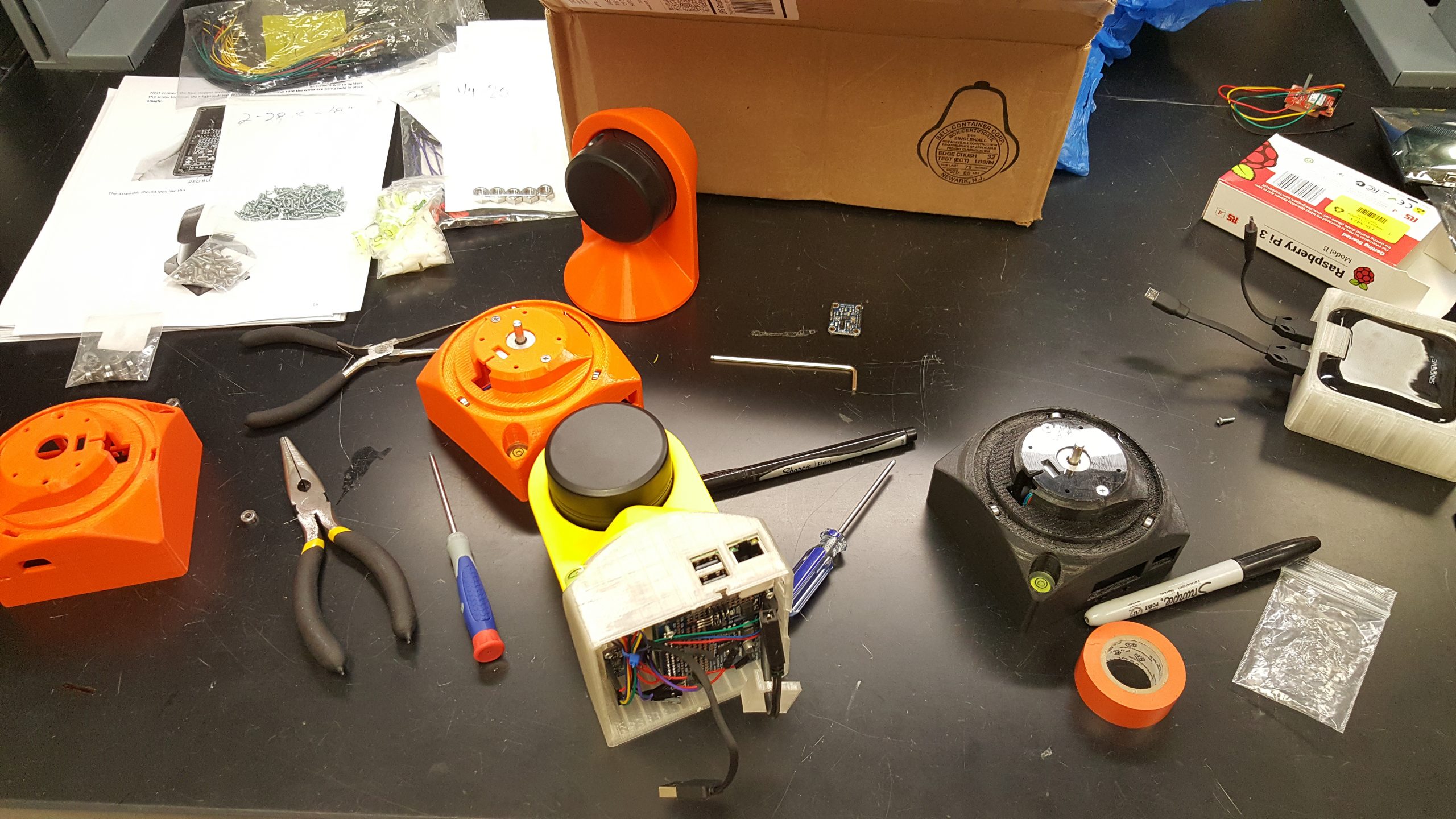

The project was implemented in two consecutive courses: IA 340 Data Mining (2017 Fall Semester) and IA 342 Data Visualization (2018 Spring Semester). In IA 340, students learned the basic concepts of LiDAR data and collected 2D LiDAR points with the 2D Sweep Sensors. In IA 342, students spent one week using the upgraded 3D Sweep Sensors to collect 3D LiDAR points of buildings at JMU and created 3D models from the collected data.

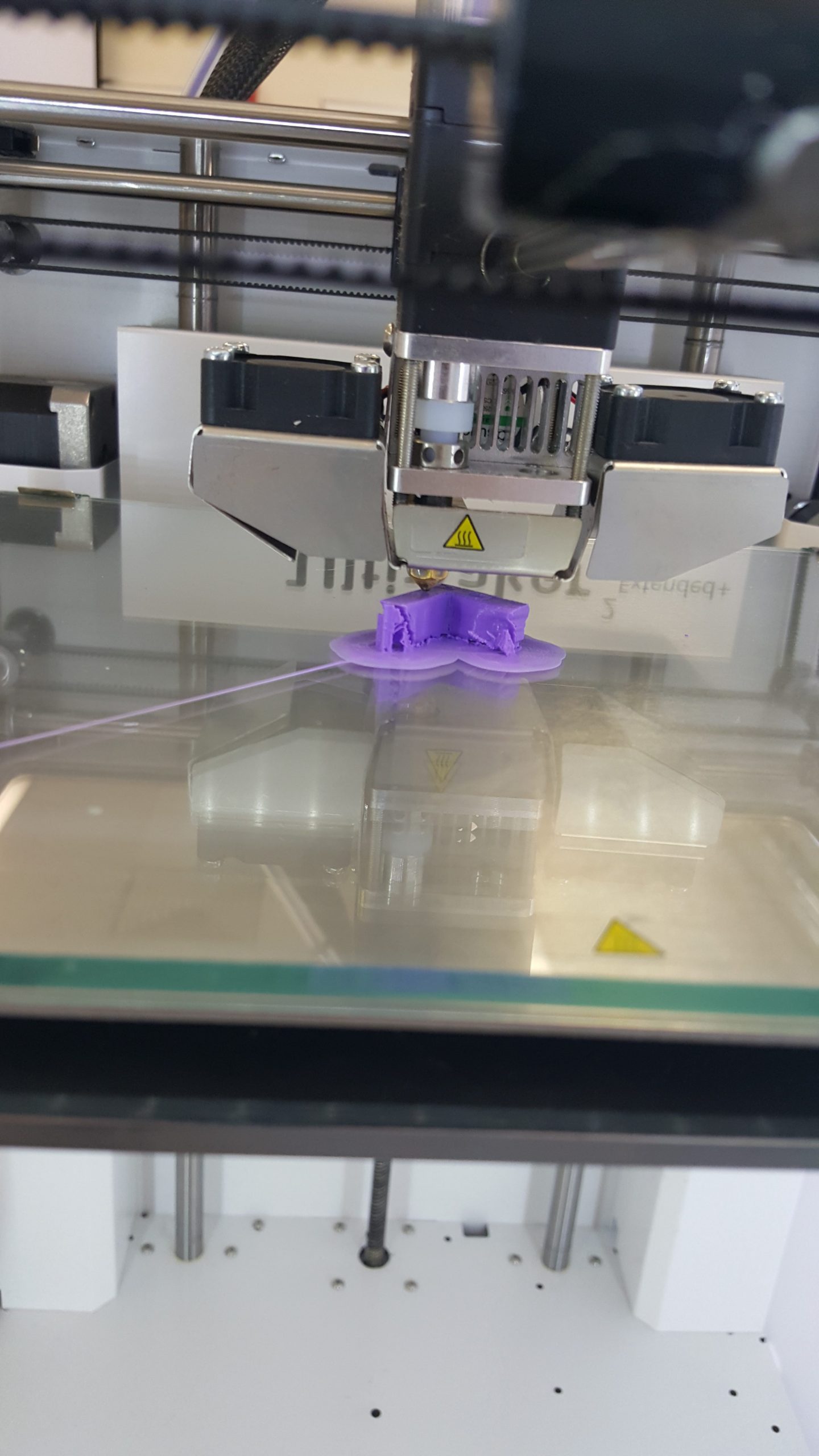
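
To make the step from 2D to 3D points concrete, the sketch below converts raw readings into Cartesian coordinates. A 2D sweep reading is taken to be an angle and a distance. For the 3D case, the code assumes that the sensor sweeps in a vertical plane while the scanner base rotates about the vertical axis; this geometry, the function names, and the units are assumptions for illustration and are not taken from the Sweep documentation.

```python
import math
from typing import Tuple


def polar_to_xy(angle_deg: float, distance: float) -> Tuple[float, float]:
    """Convert one 2D sweep reading (angle, distance) to x, y."""
    a = math.radians(angle_deg)
    return distance * math.cos(a), distance * math.sin(a)


def scan_to_xyz(base_deg: float, sweep_deg: float,
                distance: float) -> Tuple[float, float, float]:
    """Convert one 3D reading to x, y, z.

    Assumed geometry: sweep_deg is measured from the horizontal inside a
    vertical scan plane, and base_deg is the rotation of that plane
    about the vertical (z) axis.
    """
    # Position inside the vertical scan plane: horizontal reach and height.
    horizontal, z = polar_to_xy(sweep_deg, distance)
    # Rotate the horizontal reach around the z axis by the base angle.
    b = math.radians(base_deg)
    return horizontal * math.cos(b), horizontal * math.sin(b), z


# Hypothetical reading: base at 90 degrees, beam level, target 2 units away.
x, y, z = scan_to_xyz(90.0, 0.0, 2.0)
```

Applying `scan_to_xyz` to every reading of a full scan yields the x, y, z point list from which a 3D model can be built.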

In the following week, students brought their 3D models to the JMU 3Space Classroom and printed them with 3D printers.

This project can also be taught in a condensed form in the GS 469 course (Application of GIS), because GS students already know the basics of LiDAR data.

We are planning to offer an advanced Machine Learning class for IA, GS, and CS students in the 2018 Fall Semester, in which we will introduce machine learning models and show how to apply them to distinguish human movements in LiDAR points. The Sweep 3D Scanning Kit includes a Raspberry Pi, which allows students to analyze LiDAR points in real time.
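
The planned class would use machine learning models for this task. As a much simpler baseline that is not a machine learning method, the sketch below uses plain background subtraction: it compares a current 2D sweep against a stored sweep of the empty room and reports the angles where the measured distance changed noticeably. The data layout (a dictionary from integer angle to distance), the function name `moving_angles`, and the threshold value are all hypothetical.

```python
from typing import Dict, List


def moving_angles(background: Dict[int, float],
                  current: Dict[int, float],
                  threshold: float = 0.3) -> List[int]:
    """Return the angles whose distance differs from the background
    sweep by more than `threshold` (same unit as the distances)."""
    changed = []
    for angle, dist in current.items():
        ref = background.get(angle)
        # Skip angles with no background reading to compare against.
        if ref is not None and abs(ref - dist) > threshold:
            changed.append(angle)
    return sorted(changed)


# Hypothetical sweeps: angle in degrees mapped to distance in meters.
background = {0: 4.0, 1: 4.0, 2: 4.1, 3: 4.1}
current = {0: 4.0, 1: 2.5, 2: 2.6, 3: 4.1}
moved = moving_angles(background, current)
```

In this sample, the readings at angles 1 and 2 are about 1.5 m shorter than the background, which is the kind of change a person stepping in front of the sensor would cause. A trained model would be needed to tell people apart from other moving objects.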

Outcome

Upon finishing the Data Mining and Data Visualization classes, students had a better understanding of LiDAR data, 3D model creation, and 3D printing. Most of the students successfully created 3D models from LiDAR data and printed them with 3D printers.

Such knowledge and experience help students deepen their understanding of 3D visualization and enrich their practice with sensors and 3D printing.

Upon finishing the Machine Learning class, students will be able to develop programs on the Raspberry Pi that extract and monitor indoor human movements from the collected LiDAR data in real time. Students can further refine their programs in Capstone projects and present their results at the ISAT senior colloquium. Such techniques can be applied in security monitoring, indoor surveying, autonomous driving, and many other areas.

Tutorial

Below is a tutorial on how to obtain 3D points from our DIY 3D scanners and create 3D models for 3D printers.

References

[1] X. Wei and X. Yao, “3D Model Construction in an Urban Environment from Sparse LiDAR Points and Aerial Photos – a Statistical Approach,” Geomatica, vol. 69, no. 3, pp. 271–284, 2015.

[2] X. Wei and X. Yao, “A Hybrid GWR-Based Height Estimation Method for Building Detection in Urban Environments,” ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci., vol. II–2, pp. 23–29, Nov. 2014.

[3] “Scanse | Meet Sweep. An Affordable Scanning LiDAR for Everyone.” [Online]. Available: http://scanse.io. [Accessed: 11-Oct-2017].

[4] “Sweep 3D Scanning Kit.” [Online]. Available: http://scanse.io/3d-scanning-kit/. [Accessed: 12-Oct-2017].